NetApp collaborating with NVIDIA and partners at GTC Fall to accelerate enterprise AI adoption

Hoseb Dermanilian

NVIDIA GTC is happening October 5-9, 2020, bringing the premier artificial intelligence (AI) and deep learning conference to audiences around the globe. NetApp is a regional platinum sponsor of this event and will be featuring solutions around NVIDIA AI technology, including a just-released NetApp ONTAP AI reference architecture utilizing NVIDIA DGX A100, the universal system for all AI workloads, offering unprecedented compute density, performance, and flexibility in the world’s first 5 petaFLOPS AI system. It’s going to be an exciting event. Don’t miss NetApp’s cutting-edge sessions on the latest topics in enterprise analytics and AI.

The upcoming event is both digital and global, as GTC will run continuously across all time zones for five straight days, covering all the latest innovations for AI, HPC, graphics, accelerated data science, networking and more. You can attend live events in the time zone of your choice, with unique opportunities to interact with brilliant minds and influential leaders from a variety of industries no matter where you are. Sessions will also be available on-demand.

Modern, data-driven businesses have to manage a torrent of data. Most organizations know they need to invest in AI to harness the value of this data, and many are seeking to build a strategy and platform that can enable success.

NetApp is showcasing a number of technologies and partnerships at this year’s event focused on streamlining data management and increasing I/O performance. This includes ONTAP AI utilizing the DGX A100, streamlined MLOps with our AI Control Plane, and our latest collaborative efforts with SFL Scientific, a strategic partner for both NetApp and NVIDIA. We’ll also be hosting a range of sessions tailored specifically for North America, EMEA, and APAC audiences.

New ONTAP AI solution integrates NVIDIA DGX A100

The proven architecture of the NVIDIA DGX POD and the recently released DGX A100 system will help you overcome enterprise AI challenges with an end-to-end architecture that enables your teams to combine analytics and AI workloads. Data scientists and developers are freed to focus on productive experimentation and prototyping instead of software engineering, systems integration, and troubleshooting.

When NVIDIA announced the DGX A100 back in May, NetApp committed to quickly deliver new DGX POD architectures utilizing the DGX A100. These reference architecture solutions incorporate the design principles and best practices that enable maximized AI performance at scale. Our just-released reference architecture NVA design guide details the operation of NetApp ONTAP AI integrating DGX A100 in one-, two-, and four-node configurations.

DGX A100 combines the power of eight NVIDIA Tensor Core A100 GPUs with six NVIDIA NVSwitch network interconnects, two 64-core AMD CPUs, and 1TB of system memory. NetApp ONTAP AI with DGX A100 systems combines industry-leading compute capabilities with the latest NVIDIA Mellanox HDR 200Gb InfiniBand and 200GbE network fabrics and NetApp AFF storage for high-performance I/O and advanced data management. ONTAP AI solutions are capable of supporting all your AI workloads at scale, including analytics, training, and inference, making them well suited to the enterprise data center.

Proven scalability and performance

NetApp has extensively validated the performance of these systems using a variety of benchmarks to stress-test various NVIDIA and NetApp components. In addition, a number of real-world deep learning benchmarks were run to validate system performance and scaling.

For example, MLPerf is an industry-standard benchmark for deep learning infrastructure. Our test used ResNet-50 along with the ImageNet dataset to validate training performance. As the figure below shows, images/sec for ONTAP AI with the DGX A100 increased linearly as the number of DGX nodes scaled from one to two to four and remained consistent across epochs.

Testing of eight-node systems is still ongoing. Results will be added to the reference architecture as they become available.
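One way to put a number on "linear" scaling is to compare measured throughput at each node count with the ideal of the single-node rate multiplied by the number of nodes. The short Python sketch below illustrates that calculation. The function name and the images/sec values are hypothetical placeholders chosen for illustration; they are not measured ONTAP AI results, which are published in the reference architecture.

```python
def scaling_efficiency(throughput_by_nodes):
    """Return {node_count: efficiency}, where 1.0 means perfectly linear scaling.

    Efficiency is measured throughput divided by the ideal throughput,
    taking the smallest node count in the input as the baseline.
    """
    base_nodes = min(throughput_by_nodes)
    per_node_base = throughput_by_nodes[base_nodes] / base_nodes
    return {
        nodes: throughput / (nodes * per_node_base)
        for nodes, throughput in sorted(throughput_by_nodes.items())
    }


# Hypothetical images/sec values for illustration only (not benchmark results).
example_images_per_sec = {1: 1000.0, 2: 1960.0, 4: 3800.0}

for nodes, efficiency in scaling_efficiency(example_images_per_sec).items():
    print(f"{nodes} node(s): {efficiency:.0%} of ideal linear scaling")
```

An efficiency close to 1.0 at every node count is what a linear scaling curve looks like numerically; values that fall as nodes are added would point to a bottleneck somewhere in the compute, network, or storage path.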

See the NetApp GTC Sessions below for opportunities to learn more about ONTAP AI and DGX A100.

MLOps with the NetApp AI Control Plane

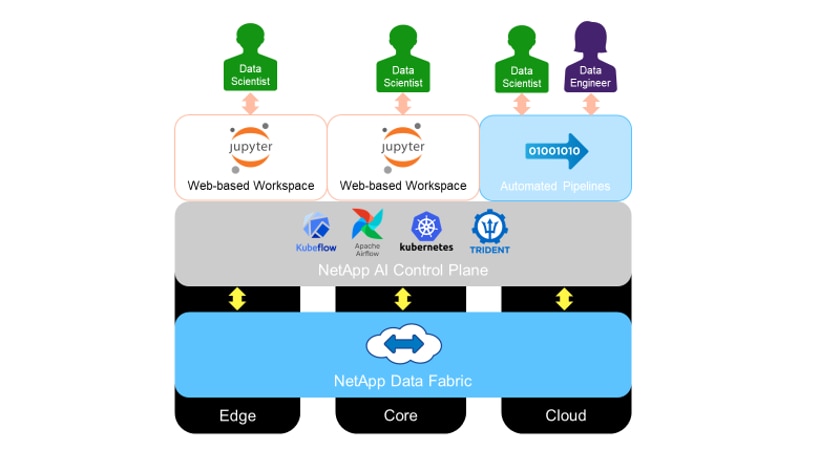

Machine learning operations, or MLOps, is the confluence of AI development and DevOps. The goal of MLOps is to allow AI teams to create development and model training workflows that are industrial grade, streamlined, and enterprise ready. Unfortunately, in too many organizations data scientists spend most of their time on non-data-science work. ML development too often relies on ad hoc software, plug-ins, scripts, and a myriad of siloed tools that make it complex.

The NetApp AI Control Plane is NetApp’s full-stack AI data and experiment management solution, providing extreme scalability, streamlined deployment, and nonstop data availability. The AI Control Plane makes it possible to quickly build an AI pipeline and integrate it with your DevOps pipeline.

The NetApp AI Control Plane integrates open source technologies including Kubernetes and Kubeflow with a data fabric enabled by NetApp, which provides uncompromising data availability and portability across on-premises, public cloud, and hybrid cloud environments.

The NetApp AI Control Plane addresses the data management needs that come with MLOps. Data scientists no longer have to wait for copies of datasets, and your organization no longer has to dedicate massive amounts of costly high-performance storage to store many copies of the same data. The headaches and risks that result from trying to track changes across multiple different versions of the same dataset simply disappear.

See the NetApp GTC Sessions listed below for opportunities to learn more about the NetApp AI Control Plane.

Ideation workshops with SFL Scientific

SFL Scientific is a data science consulting firm focused on strategy, technology, and solving business and operational challenges with AI. SFL's capabilities range from developing an AI strategy to building custom AI applications at scale. With a globally connected network of technology and cloud partners, SFL Scientific's core services include leading cross-functional efforts across business, IT, and operations.

NetApp and NVIDIA are working with SFL Scientific to offer AI workshops tailored to accelerate AI adoption and to dive deep into each organization's use cases. These workshops can help you connect AI technology to business value. Topics include:

- AI use case exploration and prioritization

- Data strategy to support use cases

- Infrastructure/architecture recommendations

- Data quality and risk identification

To find out more about these workshops, contact info@thinkbrg.com or call +1 (617) 804-0002.

NetApp GTC sessions

NetApp will be offering a total of 12 sessions during GTC:

- Americas: 3 sessions

- EMEA: 3 sessions

- APAC: 6 sessions, 3 in Japan and 3 in Korea

Common sessions for each region

The following two sessions will be offered in each geography:

Session A22260: NetApp ONTAP AI with NVIDIA DGX A100

Presenters: David Arnette, NetApp; Jacci Cenci, NVIDIA

This session covers the updated ONTAP AI reference architecture with NVIDIA DGX A100 systems, with an overview of the ONTAP AI infrastructure, DGX A100 systems, and the NetApp AI Control Plane for managing data science workflows on ONTAP AI. We'll also briefly cover other updates to the NetApp AI portfolio including EF-series with DGX A100 and NetApp StorageGRID for object storage data lakes.

Session A22323: NetApp AI Control Plane—AI Data Management Across a Hybrid Cloud

Presenter: Mike Oglesby, NetApp

As organizations increase their use of AI, they face two major challenges: data availability and workload scalability. In this session, we will describe how AI workloads and petabytes' worth of training data can be seamlessly scaled together across GPU nodes and regions through the use of NVIDIA DeepOps, Kubeflow, Apache Airflow, and the NetApp AI Control Plane. These tools facilitate the rapid provisioning of GPU-accelerated data science workspaces and jobs, the efficient maintenance of dataset and model versions at the data science experiment level, and the automation of data ingestion from a variety of sources across disparate environments. The end result is less idle time for GPUs and data scientists, and an increase in data availability leading to more accurate models.

Special sessions

We are also offering exclusive sessions in each region:

Session A22433 (Americas): NetApp AI for Healthcare

Presenters: Esteban Rubens, Rick Huang, NetApp; Abood Quraini, NVIDIA

This session offers guidelines for customers building AI infrastructure using NVIDIA DGX systems and NetApp AFF storage for healthcare use cases. It includes information about the high-level workflows used in the development of deep learning models for medical diagnostic imaging, validated test cases, and results. We’ll also cover the latest features of NVIDIA Clara Train V3 and how NetApp collaborated with NVIDIA to assist researchers in the fight against COVID-19.

Session A22521 (EMEA): Developing Automotive AI with NetApp and NVIDIA Technology

Presenters: Christian Ott, Bob Nagy, NetApp; Tom Westendorp, NVIDIA

When you mention the idea of applying AI in the automotive sector to someone, their first thought is self-driving cars. It’s estimated that the leading thirty companies have invested $16 billion into building a car that can take itself from A to B, and, as we all know, that technology has yet to arrive. This session will discuss the complexity of the task of autonomous driving and describe additional applications for AI in automotive, including the challenges from a data management perspective and how NetApp and NVIDIA are working to solve the problems together.

Session A22585 (Japan): Data Driven AI for Financial Services

Presenter: Deepth Dinesan, Director AI, Robotics, and Quantum Computing, NetApp

Recent advances in machine learning, deep learning, reinforcement learning, and quantum machine learning are fundamentally changing the competitive landscape in financial services and opening the door to new opportunities. Scalable, data-driven architectures are turbocharging machine intelligence to classify, predict, and make autonomous decisions in real time, creating new capabilities and disrupting the current dominant ones. This session will focus on data-driven architectures to enable scalable and rapid deployment of AI applications within financial services firms, including customer case studies.

Session A22526 (Korea): Best practices for Business Innovation with NetApp ONTAP AI

Presenter: Eun-Seob Kim, Solutions Engineer, NetApp Korea, APAC

This session explains the key technical requirements that AI storage must provide in AI infrastructure environments and describes the ONTAP AI reference architecture created by NetApp and NVIDIA. Additionally, we will share examples (domestic and overseas) of business innovation through ONTAP AI, including AI for healthcare, enterprise companies' deep learning, and AI infrastructure for machine learning.

More information and resources

To learn more about the full range of NetApp AI solutions, visit netapp.com/ai. The following resources cover the solutions described in this blog and other NetApp AI solutions.

- NetApp ONTAP AI solution brief

- NetApp ONTAP AI with NVIDIA DGX A100 Systems design guide

- NetApp AI Control Plane solution brief

- NetApp AI Control Plane technical report

- NetApp EF600 All-Flash Array with NVIDIA DGX SuperPOD solution brief

- NetApp StorageGRID Data Lake for Autonomous Driving Workloads technical report

Hoseb Dermanilian

Hoseb joined NetApp in 2014. In his current role, he manages and develops the AI and Digital Transformation business globally. Hoseb's focus is to propose and discuss NetApp's value add in the AI and Digital Transformation space and to help customers build the right platform for their data-driven business strategies. As part of business development, Hoseb also focuses on developing NetApp's AI channel business by recruiting and enabling the right AI ecosystem partners and enabling go-to-market strategies with those partners. Hoseb comes from a technical background. In his previous role, he was the Consulting System Engineer for NetApp’s video surveillance and big data analytics solutions. Hoseb holds a master's degree with distinction in Electrical and Computer Engineering from the American University of Beirut, and he has multiple globally recognized conference and journal publications in the field of IP security and cryptography.