NetApp IT Perspective: StorageGRID F5 Load Balancer Design Considerations

Yahshanulla Syedshaw

One of my roles as a member of the NetApp® IT Customer-1 program is to design, architect, and implement NetApp StorageGRID® for our object storage requirements. Our StorageGRID deployment consists of multiple Storage Nodes deployed across many sites. The Storage Nodes provide service endpoints for applications and manage storage, replication, metadata, and so on. A properly designed load balancer is required to seamlessly direct clients to the optimal Storage Node so that the failure of any node, or even of an entire site, is transparent to clients.

Below are three design descriptions that may help you with load balancing for StorageGRID. I explain the use of the F5 Local Traffic Manager (LTM) and the Global Traffic Manager (GTM), and a failure scenario that can arise when using the GTM. I also propose a global design that helps avoid interruptions. All descriptions are high level.

StorageGRID F5 LTM Design

The F5 LTM (Local Traffic Manager) is a full-proxy load balancer. It terminates client connections at the virtual server and initiates separate connections to the Storage Nodes using source NAT (SNAT), so StorageGRID sees traffic arriving from the SNAT IPs. To preserve the actual client IP address, enable the X-Forwarded-For option in the HTTP profile. Note that when SSL is terminated at the F5 LTM, inserting X-Forwarded-For into the HTTP header is required. The following design is an industry-standard server load balancing layout (one-arm mode).
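To illustrate the X-Forwarded-For point, here is a minimal Python sketch of how a service or log tool sitting behind the LTM might recover the client address. It is not StorageGRID or F5 code. The function name `client_ip_from_headers`, the `trusted_proxies` set, and the example addresses are all hypothetical, and the sketch assumes a single trusted proxy hop (the LTM) whose SNAT IPs are known.

```python
from typing import FrozenSet, Mapping


def client_ip_from_headers(
    headers: Mapping[str, str],
    peer_ip: str,
    trusted_proxies: FrozenSet[str],
) -> str:
    """Return the best guess at the original client IP.

    peer_ip is the source address of the TCP connection, which is a
    SNAT IP when the request came through the load balancer.
    """
    # Only believe the header when the connection came from a known proxy;
    # otherwise any client could claim an arbitrary address.
    if peer_ip not in trusted_proxies:
        return peer_ip

    # HTTP header names are case-insensitive.
    xff = next(
        (value for name, value in headers.items()
         if name.lower() == "x-forwarded-for"),
        "",
    )
    hops = [hop.strip() for hop in xff.split(",") if hop.strip()]

    # Assumption: with one trusted hop, the last entry is the one the
    # proxy added; earlier entries may have been supplied by the client.
    return hops[-1] if hops else peer_ip
```

With a hypothetical SNAT address of `10.0.0.5` in `trusted_proxies`, a request arriving from `10.0.0.5` with `X-Forwarded-For: 203.0.113.7` resolves to `203.0.113.7`, while the same header on a connection from an unknown address is ignored.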

The general guidelines for StorageGRID F5 LTM implementation are:

- Persistence is not required, given the nature of object storage.

- Choosing between SSL termination, pass-through, or end-to-end encryption depends on your requirements. Note that SSL termination at the LTM reduces resource overhead on the StorageGRID nodes.

- Use a dedicated HA VLAN for config sync and failover.

- SNAT can use a dedicated SNAT IP or reuse the self IPs from the internal (INT) VLAN.

- SNAT mode deployment is NOT mandatory for StorageGRID deployment.
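The first guideline, that persistence is not required, can be pictured with a small Python model of a pool that rotates through healthy members with no client-to-node stickiness. This is only a conceptual sketch, not an F5 configuration or API. The `Pool` class and the node names are hypothetical, and plain round robin is an assumed balancing method, since the guidelines above do not name one.

```python
from typing import Dict, Iterable, List, Optional


class Pool:
    """Round-robin pool with health flags and no persistence."""

    def __init__(self, members: Iterable[str]) -> None:
        self.members: List[str] = list(members)
        # In a real deployment these flags would be driven by health monitors.
        self.healthy: Dict[str, bool] = {m: True for m in self.members}
        self._next = 0

    def pick(self) -> Optional[str]:
        """Return the next healthy member, or None if all are down."""
        # Try each member at most once per call so the loop always ends.
        for _ in range(len(self.members)):
            member = self.members[self._next]
            self._next = (self._next + 1) % len(self.members)
            if self.healthy[member]:
                return member
        return None


pool = Pool(["sn1", "sn2", "sn3"])
first_four = [pool.pick() for _ in range(4)]

# Mark one node as failed; later requests simply skip it.
pool.healthy["sn2"] = False
after_failure = [pool.pick() for _ in range(3)]
```

Because no request is pinned to a node, taking a Storage Node out of rotation only changes where the next request lands.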

StorageGRID F5 GTM Global HA Design

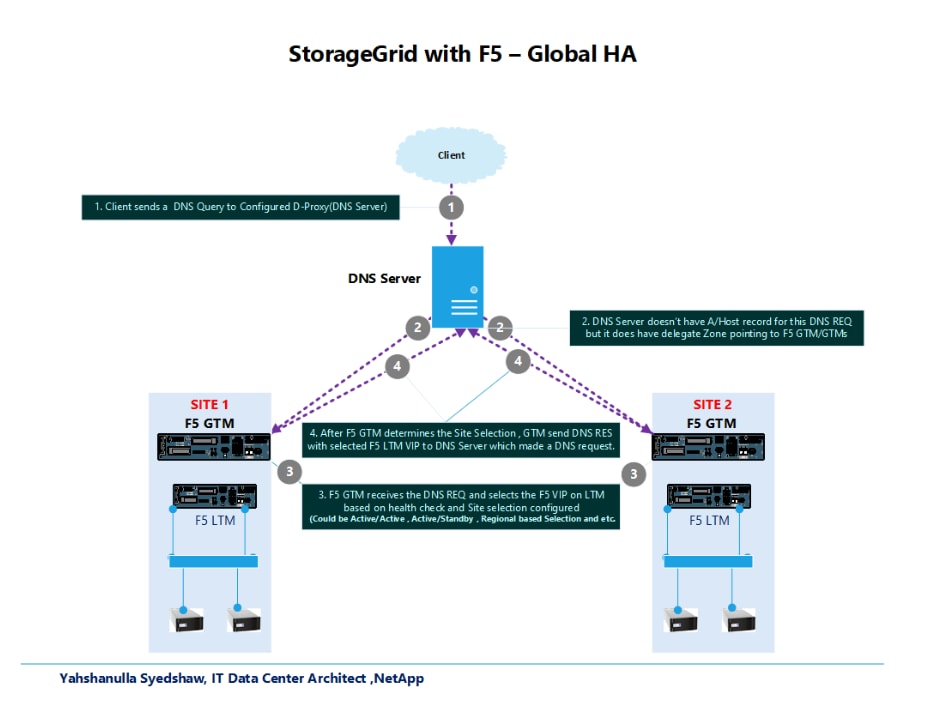

The F5 GTM (Global Traffic Manager) is an intelligent, secure DNS server that performs DNS-based load balancing and can be integrated with existing F5 LTMs in the data center. The high-level design diagram shows how GTM works.

It is important to know that GSLB (Global Server Load Balancing) for StorageGRID needs to be analyzed and implemented carefully, because an isolated StorageGRID failure at one site can cause cross-site (cross-data center) file uploads. This will likely result in application delays, end-user complaints, heavy bandwidth utilization, and more. The bottom line is to be extra cautious, because unexpected behaviors and results can arise, as described below.

In the above scenario, the StorageGRID VIPs and DNS FQDN were configured with a GSLB solution at the StorageGRID layer. If a failure occurs in the StorageGRID nodes, the network (NW), or the StorageGRID (SG) VIP F5 layer, the front-end application sends DNS requests to the GTM. The GTM may then point to Site B, causing the application to start uploading files to Site B. This may cause extra upload delay, unexpected behavior, congestion of the bandwidth between data centers, and more.

When a failure at one site is caused by StorageGRID, the network, or F5, one way to resolve the problem is to implement GSLB at the front-end application layer. Place the application layer behind the F5 LTM so that the GSLB decision can be based on LTM availability; then add the StorageGRID virtual server as a dependency in the GTM (GSLB). This matters when the StorageGRID nodes at a site suffer an isolated outage, whether from expected downtime (upgrades, planned maintenance) or unexpected downtime, because the dependency then triggers a failover of the entire stack to Site B. The recommended design is below.

In the design above, the front-end application layer at each site is configured with that site's StorageGRID F5 VIP FQDN (not the GSLB FQDN). This keeps the flow between the application and the StorageGRID nodes controlled. The front-end application layer needs to be configured with the F5 GSLB solution, with the StorageGRID VIPs added as a dependency in the health check to ensure high availability between sites.

Add Dependency in GTM to Avoid Interruption

I highly recommend adding the StorageGRID virtual server as a dependency in the front-end application layer GSLB. This way, any isolated StorageGRID failure will trigger the failover, and the entire application stack will fail over to another available site with minimal or no interruption.
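The effect of the dependency can be sketched as a small Python model of the GSLB decision. This is a conceptual illustration only, not GTM configuration. The `Site` class, the `resolve` function, the `use_dependency` flag, and the site names are hypothetical, and the model assumes a simple static preference order in which the first available site wins.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Site:
    name: str
    app_vip_up: bool          # health of the front-end app virtual server
    storagegrid_vip_up: bool  # health of the site's StorageGRID virtual server

    def available(self, use_dependency: bool) -> bool:
        # With the dependency, the app virtual server counts as available
        # only while its local StorageGRID virtual server is also up.
        if use_dependency:
            return self.app_vip_up and self.storagegrid_vip_up
        return self.app_vip_up


def resolve(sites: List[Site], use_dependency: bool = True) -> Optional[str]:
    """Return the first available site in preference order, or None."""
    for site in sites:
        if site.available(use_dependency):
            return site.name
    return None


# Isolated StorageGRID outage at the preferred site; the app itself is healthy.
sites = [
    Site("site-a", app_vip_up=True, storagegrid_vip_up=False),
    Site("site-b", app_vip_up=True, storagegrid_vip_up=True),
]
with_dependency = resolve(sites)
without_dependency = resolve(sites, use_dependency=False)
```

In this model, `with_dependency` is `site-b`, so users follow the whole stack to the healthy site. Without the dependency the answer stays `site-a`, where the application is up but its local StorageGRID is not.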

I hope you find these design considerations helpful in your efforts to deliver object storage capacity and performance across locations that are geographically distributed.

The NetApp-on-NetApp blogs feature advice from subject matter experts from NetApp IT who share their real experiences using NetApp’s industry-leading data management solutions to support business goals. Visit www.NetAppIT.com to learn more.