New NVIDIA DGX A100-Based Solutions from NetApp Enable AI for the Enterprise

Santosh Rao

Enterprises are struggling to build out the infrastructure they need to tap into the power of AI at scale. Maintaining separate silos of data center AI infrastructure to address analytics, training, and inference workloads creates complexity, drives up costs, and constrains performance. The new NVIDIA DGX A100 system announced today is designed to unify AI workloads for training, inference and data analytics on one platform, simplify infrastructure, and accelerate ROI. DGX A100 is a universal building block for data center AI, supporting DL training, inferencing, data science and other high-performance workloads from a single platform.


Built on the new NVIDIA Ampere architecture, the DGX A100 system delivers up to six times the training performance of the prior generation, consolidating the equivalent of a data center of compute infrastructure for analytics, training, and inference into a single system.

NetApp ONTAP AI will be among the first converged AI stacks to incorporate the DGX A100 and NVIDIA Mellanox networking.

“NetApp and NVIDIA have been collaborating for several years to deliver AI solutions that help enterprises accelerate AI adoption. Both companies are working on eliminating AI bottlenecks and advancing the realm of possibilities,” said Kim Stevenson, Sr. Vice President and General Manager, Foundational Data Services Business Unit, NetApp. “NetApp’s full stack AI/ML/DL platforms delivered at the edge, core and cloud with ONTAP AI complements NVIDIA’s rapidly expanding ecosystem of AI hardware, software, and development toolkits.”

“Enterprises are eager to move their AI projects more quickly from concept to production, and our collaboration with NetApp helps customers rapidly accelerate their AI adoption,” said Manuvir Das, Head of Enterprise Computing at NVIDIA. “Combining the power and capabilities of DGX A100, Mellanox networking, and NetApp cloud-connected storage, ONTAP AI provides an advanced, flexible platform for leading innovation in AI and data science.”

NetApp AI Platforms are DGX A100 Ready

NetApp and NVIDIA are collaborating closely on solutions that combine the benefits of DGX A100 and NetApp’s proven solutions for high-performance storage and advanced data management, including:

- NetApp ONTAP AI. This market-leading joint solution, which combines NVIDIA DGX systems and NetApp AFF storage, was introduced in 2018 and has been deployed at over 70 customer sites across the globe.

- NVIDIA DGX SuperPOD. NetApp joined with NVIDIA to introduce DGX SuperPOD solutions in November 2019 at SC19.

DGX A100 Ready ONTAP AI Solutions

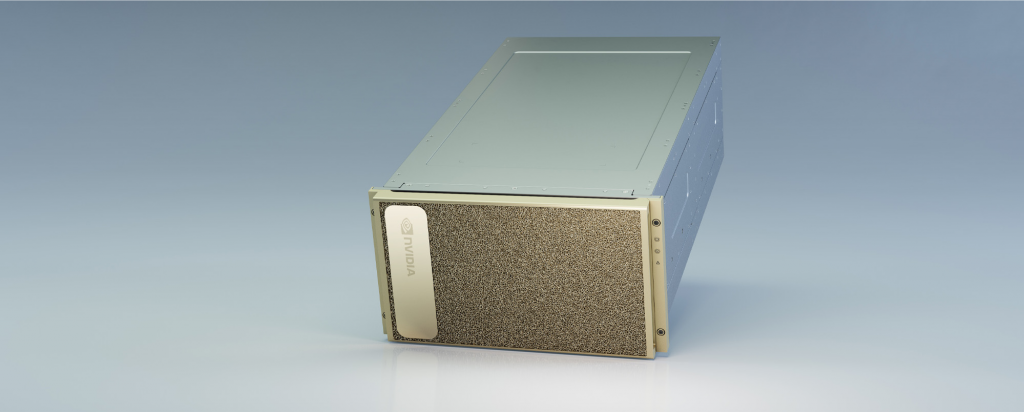

NetApp ONTAP AI architectures utilizing DGX A100 will be available for purchase in June 2020. ONTAP AI verified architectures combine industry-leading NVIDIA DGX AI servers with NetApp AFF storage and high-performance Ethernet switches from NVIDIA Mellanox or Cisco. Available options will include 2-, 4- and 8-node configurations.

KAON 3D model of ONTAP AI configuration with DGX A100, A800 and Mellanox SN2700.

ONTAP AI lets you simplify, accelerate, and scale the data pipeline needed for AI to gain deeper understanding in less time. NetApp AFF systems keep data flowing with the industry’s fastest and most flexible all-flash storage, featuring end-to-end NVMe technologies. AFF and a data fabric powered by NetApp enable you to create a data pipeline spanning from edge to core to cloud.

NetApp has released several reference architectures based on ONTAP AI targeted at use cases in specific industries:

- ONTAP AI Reference Architecture for Healthcare: Diagnostic Imaging

- ONTAP AI Reference Architecture for Autonomous Driving Workloads: Solution Design

- ONTAP AI Reference Architecture for Financial Services Workloads

ONTAP AI Solutions

NetApp has been actively expanding partnerships to deliver a broad range of AI solutions on ONTAP AI. We recently entered into a strategic relationship with Iguazio, combining the benefits of ONTAP AI with Iguazio’s data science platform for real-time machine learning applications. The combination provides enterprises with a simple, end-to-end solution for developing, deploying, and managing AI applications at scale and in real time on the ONTAP AI framework.

Iguazio’s integration leverages enterprise grade data management, data versioning, and NetApp Cloud Volumes for a seamless hybrid cloud experience. Tight integration with NVIDIA DGX enables customers to utilize GPU-as-a-Service and software containers from the NGC catalog.

NetApp has also created a solution with NVIDIA Clara Parabricks Pipelines that brings GPU-accelerated secondary analysis of next-generation sequencing data software to ONTAP AI. Core Scientific announced in March 2020 that it is providing free 30-day access to ONTAP AI and NVIDIA Parabricks for anyone needing to perform GPU-accelerated coronavirus research.

DGX SuperPOD

NetApp was one of the first vendors to support the DGX SuperPOD architecture and is a premier partner supporting the newest generation of NVIDIA DGX SuperPOD built on DGX A100. DGX SuperPOD with NetApp EF all-flash storage is a proven, validated solution that you can deploy with confidence. This fully integrated, turnkey architecture takes the risk out of deployment and puts you on the path to winning the race to AI leadership.

DGX A100 brings new and faster NVIDIA NVLink and NVSwitch technology for multi-GPU communication to the DGX SuperPOD, along with nine NVIDIA Mellanox ConnectX-6 HDR InfiniBand adapters—each with two ports of 200Gb/s InfiniBand (HDR) and Ethernet connectivity with sub-600 nanosecond latency—to deliver the I/O bandwidth to take DGX SuperPOD solutions to the next level. InfiniBand delivers high bandwidth, low latency, and flexibility to support supercomputers running AI and other HPC workloads.

NetApp EF600 all-flash NVMe storage complements the capabilities of the DGX A100 in SuperPOD configurations. The EF600 will add support for 200Gb NVMe over InfiniBand connectivity this summer (June 2020).

The EF600 delivers 2M sustained IOPS, response times under 100 microseconds, 44GBps of throughput, and 99.9999% availability. With DGX A100 architecture and the NetApp EF600, DGX SuperPOD will scale to new heights of speed and efficiency.
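To put two of these figures in perspective, the short Python sketch below converts 99.9999% availability into an annual downtime budget and estimates how long a sequential read would take at 44GBps. The function names and the 10 TB dataset size are illustrative assumptions, not NetApp figures, and the estimate assumes the quoted throughput is sustained for the whole read and that sizes use decimal units.

```python
# Back-of-the-envelope arithmetic for the EF600 figures quoted above.
# Function names and the 10 TB dataset size are hypothetical, for illustration only.

SECONDS_PER_YEAR = 365 * 24 * 60 * 60  # non-leap year


def annual_downtime_seconds(availability_percent: float) -> float:
    """Maximum downtime per year implied by an availability percentage."""
    return (1.0 - availability_percent / 100.0) * SECONDS_PER_YEAR


def read_time_seconds(dataset_gb: float, throughput_gb_per_s: float) -> float:
    """Time to stream a dataset once at a sustained throughput (decimal GB)."""
    return dataset_gb / throughput_gb_per_s


if __name__ == "__main__":
    downtime = annual_downtime_seconds(99.9999)
    print(f"99.9999% availability allows about {downtime:.1f} s of downtime per year")

    # Assumed example: a 10 TB (10,000 GB) training dataset read at 44 GB/s.
    seconds = read_time_seconds(10_000, 44)
    print(f"Reading 10 TB at 44 GB/s takes about {seconds / 60:.1f} minutes")
```

Six nines of availability works out to roughly 31.5 seconds of downtime per year, which is why the figure is usually quoted for systems that feed continuously running training jobs.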

Flexible Consumption Options

A single DGX A100 system has a maximum power draw of 6.5 kW. A rack with eight DGX A100 systems could potentially require up to 52 kW of power. To ease deployment for enterprises, NetApp and NVIDIA are partnering with ScaleMatrix and DDC to offer convenient, end-to-end solutions that deliver the power and cooling required by high-performance infrastructure, and that can be deployed in non-data center locations.

NetApp partners expand the range of available AI consumption models for DGX systems:

- ScaleMatrix. ScaleMatrix delivers ONTAP AI as a plug-and-play solution that can be deployed anywhere, providing a self-contained environment, with guaranteed air flow, integrated security, and fire and noise suppression. ScaleMatrix solutions are ideal for AI and other high-performance workloads, including edge inferencing in retail, healthcare, and manufacturing. Options include mobile (R-Series) and modular (S-Series) cabinets.

- Flexential. Flexential provides an ONTAP AI-Ready data center that makes accessing and acquiring AI infrastructure easier. Solutions are pre-optimized and ready to use. Choose from:

  - ONTAP AI Test Drive. Try out ONTAP AI with no obligation and without the overhead of shipping or installation.

  - ONTAP AI-Ready. Eliminate the burden of creating and maintaining an AI-ready data center by leaving it to the experts.

- Core Scientific and Equinix. Core Scientific recently announced The Cloud for Data Scientists, its AI platform-as-a-service solution hosted in Equinix International Business Exchange™ (IBX®) data centers. Built on verified architectures combining NVIDIA DGX systems and NetApp all-flash storage, Cloud for Data Scientists is tuned to the demands of AI and deep learning.
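The rack power figure quoted at the start of this section follows directly from the per-system maximum. The minimal sketch below reproduces that arithmetic for a few system counts. It counts only the DGX A100 systems and assumes every system draws its maximum at the same time, so storage, switches, and cooling overhead are not included. The function name and the chosen system counts are illustrative.

```python
# Worst-case DGX A100 power per rack, using the 6.5 kW maximum stated above.
# Only the DGX systems are counted; storage, networking, and cooling are excluded.

DGX_A100_MAX_KW = 6.5


def rack_power_kw(num_systems: int, per_system_kw: float = DGX_A100_MAX_KW) -> float:
    """Upper bound on power draw if every system runs at its maximum."""
    if num_systems < 0:
        raise ValueError("num_systems must be non-negative")
    return num_systems * per_system_kw


if __name__ == "__main__":
    for systems in (1, 2, 4, 8):
        print(f"{systems} x DGX A100: up to {rack_power_kw(systems):.1f} kW")
```

Eight systems give the 52 kW figure cited above, well beyond what a typical office or branch location can power and cool, which is the gap the partner offerings in this section are meant to close.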

How to Find Out More

If you want to learn more about DGX A100-powered ONTAP AI and SuperPOD configurations, NetApp and NVIDIA will be hosting a series of joint webinars, starting with:

AI Beyond the Hype – Addressing Practical, High-Impact Health Challenges

- Date: Tuesday, May 19, 2020

- Time: 9:00 am – 9:45 am PT

- Speakers:

  - Esteban Rubens, Healthcare AI Principal, NetApp (Moderator)

  - David LaBrosse, Global Director, Emerging Technologies, NetApp

  - Rahul Sathe, VP, Surgical Innovation, Cambridge Consultants

  - George Vacek, PhD, Global Director, Sequencing Strategic Development, NVIDIA

- Date: Wednesday, June 17, 2020

- Time: 8:30 am – 9:30 am PT

- Speakers:

  - Bob Nagy, Director, Technical Solutions, NetApp Global Automotive Industry

  - David Arnette, Technical Marketing Engineer, NetApp

  - Jacci Cenci, Senior Technical Marketing Engineer, NVIDIA

  - Jarrod Puyot, Solution Architect, NVIDIA

More Information and Resources

To learn more about the full range of NetApp AI solutions, visit netapp.com/ai.

Santosh Rao

Santosh Rao is a Senior Technical Director and leads the AI & Data Engineering Full Stack Platform at NetApp. In this role, he is responsible for the technology architecture, execution, and overall NetApp AI business. Santosh previously led the Data ONTAP technology innovation agenda for workloads and solutions ranging from NoSQL, big data, virtualization, and enterprise apps to other 2nd and 3rd platform workloads. He has held a number of roles within NetApp and led the original ground-up development of clustered ONTAP SAN for NetApp as well as a number of follow-on ONTAP SAN products for data migration, mobility, protection, virtualization, SLO management, app integration, and all-flash SAN. Prior to joining NetApp, Santosh was a Master Technologist for HP, where he led the development of a number of storage and operating system technologies, including the company's early-generation products in those areas.