AI is boring - or is it?

Christian Ott

As you can tell from the headline, AI is boring. Allow me to elaborate.

I’m using this slightly inflammatory headline, but my pain is real: The inflated use of the term artificial intelligence today is not doing the technology—or us—any good.

Our cars are intelligent (apparently), as are our phones and even our vacuum cleaners. Only they aren’t. Certainly not in the way we expect an artificial sentient being to be (expectations courtesy of Hollywood). Why, then, do so many industries feel the need to label dumb things as intelligent? And what does this labeling have to do with the automotive and manufacturing industries?

One obvious reason for the overuse of the term AI is its coolness factor. Anything with an AI badge sells better than without. I’m guilty of falling for this labeling; my vacuum cleaner is proof. Although it’s amazing what this little device can do independently (find its way around the house, collect dust and the occasional Lego piece, replenish its resources), it still does silly things quite often. When the tray is full, it sometimes tells me; other times it doesn’t. And it can’t tell the difference between a full tray and an obstructed airflow. Cue Chriz trying to empty an empty tray again, only to find that he needs to wrestle another Lego brick from the monster’s mouth. If the vacuum were truly intelligent, it would adapt, just like humans do. It doesn’t, so why is AI such a big deal?

To answer that question, we need to take a step back.

The process of getting to a working algorithm through AI is totally stupid. We throw tons of data at a decision process and give the machine a set of rules for determining success. (That explanation is super simplified. I don’t mean to diminish the absolutely groundbreaking research of the last decade, but for simplicity, let’s accept this explanation for now.)

This process is not fundamentally different from how humans learn; it just takes many more cycles. As a kid, when I touched the hot stove, I quickly learned not to do that again. It took me maybe one or two rounds of trial and error. A machine, in contrast, would have to burn itself a couple thousand times to learn that it’s making a wrong move.

This analogy shows that AI is basically stochastic: it tries and fails until it succeeds. When that success comes depends on input quality, learning capability, and resource availability, and the point in time is almost impossible to predict. Again, this is a very simplified view of a highly complex and interesting field of science, but basically: “The more data I throw at my machine, the more likely it is to come up with something useful.”
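
To make “tries and fails until it succeeds” concrete, here is a minimal Python sketch of trial-and-error learning by random search, a deliberately crude stand-in for real training methods. The stove data, the `success` scoring rule, and the `trial_and_error` function are hypothetical illustrations, not part of any real AI toolkit: the machine guesses a temperature threshold at random, scores each guess against labelled examples, and keeps the best guess so far.

```python
import random

# Hypothetical labelled examples: (stove temperature in °C, 1 if touching it hurts else 0).
# The learner only sees these pairs; it is never told where the cut-off lies.
DATA = [(temp, 1 if temp > 50 else 0) for temp in range(0, 201, 5)]


def success(threshold, data):
    """The rule for determining success: share of examples the threshold classifies correctly."""
    correct = sum((temp > threshold) == bool(hurts) for temp, hurts in data)
    return correct / len(data)


def trial_and_error(data, trials, seed=0):
    """Guess thresholds at random and remember the best guess seen so far."""
    rng = random.Random(seed)
    best_threshold, best_score = None, -1.0
    for _ in range(trials):
        guess = rng.uniform(0, 200)   # a blind attempt
        score = success(guess, data)
        if score > best_score:        # keep improvements, forget failures
            best_threshold, best_score = guess, score
    return best_threshold, best_score


for trials in (1, 10, 1000):
    threshold, score = trial_and_error(DATA, trials)
    print(f"{trials:>5} trials: threshold {threshold:6.1f} °C, accuracy {score:.0%}")
```

Because every run uses the same seed, a longer run replays the shorter run’s guesses first, so more trials can only keep or improve the best score. That mirrors the point above: more attempts make a useful answer more likely, but you cannot say in advance on which attempt it will show up.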

What’s the big deal, then?

We have to remind ourselves that this kind of "teaching" a computer how to work was not possible just 10 years ago. So really, the last decade of digital transformation brought us to a massive watershed. Faster, more specialized processors and GPUs have emerged that can solve AI problems, and we can now collect and manage the massive amounts of data that are the foundation of this problem-solving process. Today, we are capable of "teaching" our computers how to behave without writing everything into an "if … else … then" format. That right there is the big deal.
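
The difference between writing an "if … else … then" rule and "teaching" one can be sketched in a few lines of Python. In this hypothetical quality-inspection example (the features, data, and function names are illustrative assumptions), `handwritten_rule` is spelled out by an engineer, while `train_perceptron` uses the classic perceptron algorithm to derive weights purely from labelled examples, adjusting itself only when it gets an example wrong.

```python
# Hypothetical inspection data: (scratch length in mm, dent depth in mm) -> 1 if faulty.
EXAMPLES = [
    ((0.5, 0.2), 0), ((1.0, 0.5), 0), ((2.0, 0.5), 0), ((0.5, 2.0), 0),
    ((3.0, 1.0), 1), ((1.5, 2.5), 1), ((4.0, 0.5), 1), ((2.5, 2.0), 1),
]


def handwritten_rule(scratch, dent):
    """The classic approach: an engineer spells out the decision explicitly."""
    if scratch + dent > 3.0:
        return 1
    else:
        return 0


def train_perceptron(examples, epochs=1000, lr=0.1):
    """Learn two weights and a bias from labelled examples; no decision rule is written down.

    The perceptron only updates when it misclassifies an example. On linearly
    separable data like this, it stops making mistakes after finitely many updates.
    """
    w1, w2, b = 0.0, 0.0, 0.0
    for _ in range(epochs):
        mistakes = 0
        for (x1, x2), label in examples:
            predicted = 1 if w1 * x1 + w2 * x2 + b > 0 else 0
            error = label - predicted          # -1, 0 or +1
            if error != 0:                     # wrong answer: nudge the weights
                mistakes += 1
                w1 += lr * error * x1
                w2 += lr * error * x2
                b += lr * error
        if mistakes == 0:                      # a full pass without errors
            break
    return w1, w2, b


def learned_rule(w1, w2, b):
    """Turn trained weights into a classifier with the same signature as the hand-written rule."""
    return lambda scratch, dent: 1 if w1 * scratch + w2 * dent + b > 0 else 0


rule = learned_rule(*train_perceptron(EXAMPLES))
for (scratch, dent), label in EXAMPLES:
    print(f"{scratch} mm / {dent} mm: label {label}, "
          f"hand-written {handwritten_rule(scratch, dent)}, learned {rule(scratch, dent)}")
```

On this tiny, linearly separable set, the learned rule reproduces every label even though nobody wrote a threshold into the code. Real systems use far larger models and datasets, which is exactly why the faster processors, GPUs, and data management mentioned above matter.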

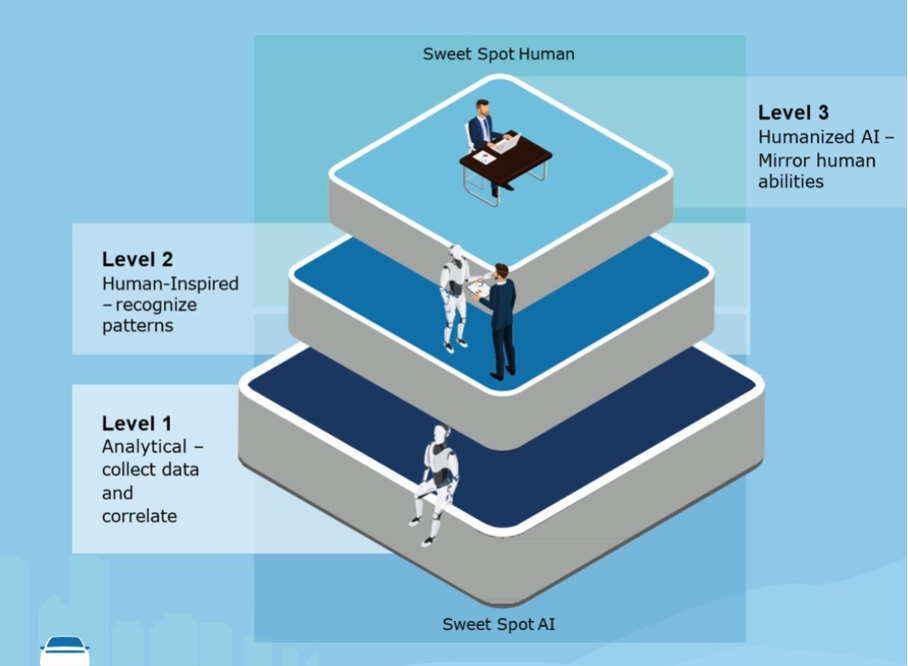

In the automotive and manufacturing industries, this capability opens many doors. Algorithms that drive cars autonomously will make serious accidents much less likely. Production quality will improve when faulty goods are identified in real time. Workplaces can be made safer and more efficient through advanced video surveillance. The use cases are virtually endless, but they can be categorized into roughly three areas, as shown in the following figure.

In this blog post, we look at level 1, where the entry barrier is lowest.

At the bottom of the pyramid, we find "simple" use cases, such as using video analytics for quality assurance of produced goods, or correlating data from running installations to find potential error patterns in software code up front. We use the latter approach with the smart digital advisor NetApp® Active IQ®. “Simple AI” is actually the best starting point for companies that are just setting off on their AI journey. It delivers ROI very quickly, and most of the technology is ready to use without lengthy up-front development.

What’s stopping the adoption of AI, then? Well, the biggest challenge is not the lack of code or tools to develop algorithms. The problem is more fundamental—how to get the right data to the right place with the right attributes at the right time.

At NetApp, we have years of experience with our own data pipeline. We used it to develop the Active IQ Digital Advisor tool and to build many successful customer installations that power AI. As shown in the following figure, we provide the data management from edge to core to cloud that customers need to feed their training algorithms.

Let’s take that piece by piece.

- Collecting data at the edge

- Normalizing the data and ingesting it into the data pipeline, either as files or in object format enriched with metadata (e.g., Shopfloor 4.0)

- Moving the data to the core or the cloud for further processing

- Providing deep integration into data scientists’ development tools (read the blog)

- Modern and performant data lake solutions to manage the data throughout its lifecycle from ingest to archive

- Partnerships from startups to global integration partners to help with everything from tagging (SiaSearch) to full-service offerings (NetApp Keystone™)

- Providing a hyperscaler-independent link to all of the major cloud providers (Google, Microsoft, AWS, IBM, and others) to access AI capabilities in the public cloud (http://cloud.netapp.com)

- Archiving solutions in private or public clouds
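
The edge-to-core-to-cloud flow in the list above can be sketched as a toy Python pipeline. Everything here is a hypothetical illustration rather than a NetApp API: the CSV input format, the metadata fields, and the in-memory `tiers` dictionary standing in for core, cloud, and archive storage are all assumptions made for the example.

```python
import json
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class Record:
    """A normalized reading plus the metadata attached at ingest."""
    payload: dict
    metadata: dict = field(default_factory=dict)


def collect_at_edge(raw_lines):
    """Edge: parse raw sensor output, assumed to be 'machine_id,sensor,value' lines."""
    for line in raw_lines:
        machine_id, sensor, value = line.strip().split(",")
        yield {"machine_id": machine_id, "sensor": sensor, "value": value}


def normalize_and_enrich(reading, site):
    """Normalize field formats and types, then attach metadata for later search and tagging."""
    payload = {
        "machine_id": reading["machine_id"].upper(),
        "sensor": reading["sensor"].lower(),
        "value": float(reading["value"]),
    }
    metadata = {
        "site": site,
        "ingested_at": datetime.now(timezone.utc).isoformat(),
        "schema": "sensor-reading/v1",  # hypothetical schema tag
    }
    return Record(payload, metadata)


def move(record, tiers, tier):
    """Move a record to a storage tier such as 'core' or 'cloud' (here: a list in a dict)."""
    tiers.setdefault(tier, []).append(record)


def archive(tiers, source, target="archive"):
    """Archive: shift every record in one tier to long-term storage."""
    tiers.setdefault(target, []).extend(tiers.pop(source, []))


tiers = {}
raw = ["press-07,Temp,81.5", "press-07,Vibration,0.02"]
for reading in collect_at_edge(raw):
    move(normalize_and_enrich(reading, site="plant-a"), tiers, "core")
archive(tiers, "core")
print(json.dumps([{**r.payload, **r.metadata} for r in tiers["archive"]], indent=2))
```

Keeping payload and metadata separate is one possible design choice: the metadata can be indexed, tagged, or used for lifecycle decisions without touching the measured values themselves.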

In summary

AI may have simplified the work of the developer, reducing cost and risk on the manufacturer’s side. This is where the hype ends and the real value of AI emerges, especially in the automotive and manufacturing space. Reducing software development time and accelerating new business models that generate new revenue streams should be the goals of automotive industry players. (Otherwise, we’re looking at extinction, but that’s a topic for another day, so watch this space.)

So, while I’m bored with the inflated use of AI labels, the concept is very relevant: its time has come. And funnily enough, it’s the simple use cases that put us on the path past early adoption. Our real-world AI challenge is no longer getting machines to develop code, but giving them enough data, in the right way, to write perfect code that covers all circumstances. The only way to do that is to provide them with more (relevant) data in the training phase.

Therefore, the true AI challenge is data and its management at enterprise scale. The more integral AI becomes to your organization, the less it should be treated as a science project. It has to be part of core enterprise IT and deserves to be treated accordingly, so that your teams and algorithms can play with fire without burning down the house. Talk to me if you’d like to discuss how NetApp and I can help with your AI projects.

To learn more about NetApp AI in the automotive industry, watch these on-demand videos.

Christian Ott

Christian Ott is the Director of Technical Sales for NetApp’s largest automotive and manufacturing customers and has been with NetApp for more than 10 years. During this time, he has held several positions in technical sales and management and has been responsible for various customers in the semiconductor, manufacturing, and automotive industries.