GTC Thursdays: Building Out an AI Center of Excellence

Santosh Rao

The world is undergoing an unprecedented digital shift in response to the COVID-19 pandemic. While the economic shock may be widespread, enterprises that best position themselves to satisfy emerging demands will fare the best.

Increasingly, digital success depends on AI—in the form of intelligent chatbots and recommender systems, more flexible and efficient supply chains and distribution networks, enhanced product designs, and much more. No enterprise can afford to overlook the potential benefits.

And yet, IDC reports that a significant percentage of enterprise AI projects fail, and that AI technology is not performing as expected. Lack of skilled staff and unrealistic expectations rank among the top reasons for these failures.¹

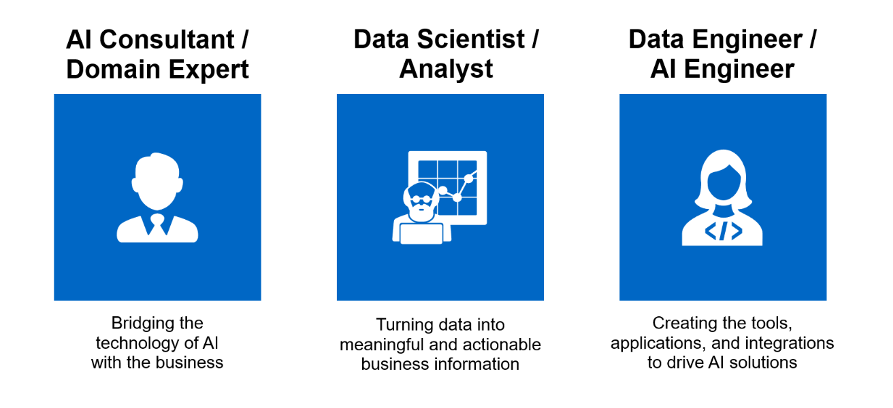

To help enterprises succeed with AI, both NetApp and NVIDIA promote the idea of an AI Center of Excellence: an IT-led AI infrastructure platform, accessible across the company, that brings together people, processes, and technology. By centralizing talent and democratizing access to AI technology, this approach enables your teams to iterate faster, automate reproducibility, and deliver AI projects up to three months sooner with higher quality. In this blog, I explain how to think about and plan an AI Center of Excellence for your company.

Establishing an AI Strategy

The starting point for any AI Center of Excellence (CoE) is getting buy-in and establishing a strategy for the company:

- Executive sponsorship. To succeed, a CoE needs buy-in and sponsorship from one or more senior executives. That might be the Chief Information Officer (CIO), the Chief Data Officer (CDO), or the VP of AI. In some cases, it may involve someone from the company’s Board of Directors.

- Goal and vision. It’s important to establish clear goals:

  - Are you trying to establish thought leadership and pivot the entire company?

  - Are you trying to create a new, transformative product or workflow that showcases AI technology?

  - Are your goals strictly use-case driven?

- Scope. Decide between a narrow and a broad focus:

  - Narrow focus. The use cases of a single business unit (BU) serve as the pilot for the CoE, narrowing the focus while technologies, processes, and personnel come up to speed.

  - Broad focus. The CoE attempts to drive progress in parallel across multiple BUs, pooling resources and working across diverse use cases to shepherd them from pilot to production.

Cataloging Data and AI Use Cases

Data is the “oil” of the digital economy. AI projects simply won’t succeed without access to the right datasets. One of the benefits that a CoE can deliver is an organized catalog of available datasets, both existing and new. In addition to existing company datasets, such as data extracted from corporate systems of record, production data, and customer preference data, AI operations often require additional datasets from external sources. For instance, if your business is weather dependent, you might need access to climate and weather datasets covering all the regions where you operate.

Starting with a view of the available data, you can begin to ask important questions:

- What can we do with this data?

- What can we learn from it?

- How do we prep and label each dataset for AI?

- What improvements can we make to an existing business use case or workflow using AI?

- What net-new use cases or workflows can we undertake?

- What types of data are needed to enable or augment this use case? Is this data we have or data we can get access to?
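To make the idea of a catalog concrete, here is a minimal sketch of how a CoE might record datasets so the questions above can be answered by query rather than by hallway conversation. The `DatasetEntry` fields, the helper functions, and the sample entries are all hypothetical illustrations, not a NetApp or NVIDIA schema.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class DatasetEntry:
    """One row in a hypothetical CoE dataset catalog."""
    name: str
    source: str     # "internal" (systems of record, production data) or "external"
    data_type: str  # e.g. "structured", "image", "video", "audio", "text"
    labeled: bool   # has the dataset been prepped and labeled for AI?
    use_cases: List[str] = field(default_factory=list)


def datasets_for(catalog: List[DatasetEntry], use_case: str) -> List[DatasetEntry]:
    """Return the catalog entries already mapped to a given use case."""
    return [d for d in catalog if use_case in d.use_cases]


def needs_labeling(catalog: List[DatasetEntry]) -> List[str]:
    """Return the names of datasets that still need prep and labeling."""
    return [d.name for d in catalog if not d.labeled]


# Illustrative entries only; names and use cases are made up.
catalog = [
    DatasetEntry("orders", "internal", "structured", True, ["demand-forecast"]),
    DatasetEntry("store-camera-feeds", "internal", "video", False, ["shelf-monitoring"]),
    DatasetEntry("regional-weather", "external", "structured", True, ["demand-forecast"]),
]
```

Here `datasets_for(catalog, "demand-forecast")` returns the orders and external weather entries, and `needs_labeling(catalog)` surfaces the camera feeds as the data-prep backlog. A real catalog would live in a shared service rather than in code, but the fields are the ones the questions above ask about.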

Data Infrastructure Strategy

Once you have a catalog of data assets and current or potential AI use cases, you can begin to design data infrastructure to address the resulting needs. It’s important to recognize that your data management needs will differ depending on the data types you are dealing with. You’ll need the ability to gather data on an ongoing basis for both AI training and inference, possibly in real or near-real time.

Dataflows, Pipeline Sizing, and Data Sources

Managing dataflows is an important part of AI infrastructure design. Your data bandwidth and storage needs are a function of the number of edge endpoints you have and how much data each endpoint generates. This may also determine whether you need to aggregate data regionally or move it directly to a central data lake.

For example, in a recent blog I talked about the requirements for an autonomous driving project. The endpoints in that example are test cars, each capable of generating 1 to 5 TB of data per hour. In that environment, it’s typical to aggregate data regionally before delivering it to a central data lake.

A more common example is a retail operation with multiple stores. You have to understand the data footprint of a single retail store and determine whether you need to aggregate data regionally.
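The sizing relationship above (endpoints times data per endpoint) is simple arithmetic, sketched below. The fleet size, hours of operation, and WAN link speed are hypothetical inputs; only the 1 to 5 TB/hour range comes from the autonomous driving example. The bandwidth figure assumes all endpoints transfer while they generate data, so it overstates the need if data is offloaded later at a depot.

```python
def ingest_estimate(endpoints: int, tb_per_hour: float, active_hours_per_day: float):
    """Estimate daily volume and peak bandwidth for a fleet of edge endpoints.

    Returns (TB per day, aggregate Gbit/s while all endpoints are generating data).
    Uses decimal units: 1 TB = 10**12 bytes, 1 Gbit = 10**9 bits.
    """
    tb_per_day = endpoints * tb_per_hour * active_hours_per_day
    bits_per_second = endpoints * tb_per_hour * 1e12 * 8 / 3600
    return tb_per_day, bits_per_second / 1e9


def needs_regional_aggregation(peak_gbps: float, wan_link_gbps: float) -> bool:
    """Flag when peak ingest exceeds what the link to the central data lake can carry."""
    return peak_gbps > wan_link_gbps


# Hypothetical fleet: 10 test cars at 2 TB/hour, driving 8 hours a day.
tb_day, peak_gbps = ingest_estimate(endpoints=10, tb_per_hour=2, active_hours_per_day=8)
print(f"{tb_day:.0f} TB/day, {peak_gbps:.1f} Gbit/s peak")   # 160 TB/day, 44.4 Gbit/s peak
print(needs_regional_aggregation(peak_gbps, wan_link_gbps=10))  # True
```

The same two functions work for the retail case: set `endpoints` to the number of stores and `tb_per_hour` to the measured footprint of a single store.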

It’s also important to assess your needs for file, block, and object storage. File storage is often used for image, video, and audio data files, while block storage is common for structured data such as databases, time-series, and log data. Object storage may be needed for dataflows associated with cloud-native use cases and large hybrid use cases.
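One way to apply these rules of thumb across many use cases is to encode them as a lookup table, as in the minimal sketch below. The category keys and the `"review"` fallback are hypothetical labels chosen for illustration; real placement decisions also depend on performance, protocol, and cost requirements that a table like this ignores.

```python
from typing import Dict, Iterable, List

# Rules of thumb from the paragraph above; the keys are hypothetical labels.
STORAGE_HINTS = {
    "image": "file",
    "video": "file",
    "audio": "file",
    "database": "block",
    "time-series": "block",
    "log": "block",
    "cloud-native": "object",
    "hybrid": "object",
}


def storage_plan(workload_kinds: Iterable[str]) -> Dict[str, List[str]]:
    """Group a workload's data kinds by the storage type each one suggests."""
    plan: Dict[str, List[str]] = {}
    for kind in workload_kinds:
        storage = STORAGE_HINTS.get(kind, "review")  # unknown kinds are flagged for review
        plan.setdefault(storage, []).append(kind)
    return plan


print(storage_plan(["video", "log", "image", "genomics"]))
# {'file': ['video', 'image'], 'block': ['log'], 'review': ['genomics']}
```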

Each data source is likely to have unique requirements for data prep and labeling in preparation for training or inference, and all data will need to be archived long-term for future training needs and reproducibility. Last week’s blog described the NetApp AI Control Plane, which can help integrate data management into your machine learning operations (MLOps) processes.
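Reproducibility depends on knowing exactly which version of the data trained a given model. As a generic illustration (not how the NetApp AI Control Plane works, which relies on its own data management features), a CoE could fingerprint each archived dataset with content hashes and store that fingerprint alongside the model. The function names are hypothetical.

```python
import hashlib
import json
from pathlib import Path
from typing import Dict


def file_sha256(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash a file in 1 MiB chunks so large files need not fit in memory."""
    h = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            h.update(chunk)
    return h.hexdigest()


def dataset_manifest(root: str) -> Dict[str, str]:
    """Map every file under root (as a relative POSIX path) to its SHA-256 digest."""
    base = Path(root)
    return {
        p.relative_to(base).as_posix(): file_sha256(p)
        for p in sorted(base.rglob("*"))
        if p.is_file()
    }


def manifest_id(manifest: Dict[str, str]) -> str:
    """One fingerprint for the whole dataset version: hash of the sorted manifest."""
    canonical = json.dumps(manifest, sort_keys=True).encode("utf-8")
    return hashlib.sha256(canonical).hexdigest()
```

If any file is added, removed, or modified, `manifest_id` changes, so a model record that stores it points unambiguously at one archived dataset version.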

Where to Deploy

Another important consideration for your AI Center of Excellence is where to deploy: on premises, in a colocation facility, in the public cloud, or in a hybrid configuration. The most important principle is that compute and data should be together if at all possible.

If your data is in the cloud, computing should be done there too. This gives you access to virtually unlimited resources and the latest hardware, but cost can become an issue as operations grow.

Colocation is a good option if your existing datacenter can’t deliver the power and cooling necessary to support the latest high-performance equipment. Bearing in mind that your CoE likely needs to support diverse use cases and datasets, on-premises deployment often makes the most sense in the long run.
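The cost trade-off can be framed as a break-even calculation: how many months of cloud spend equal the up-front cost of owning the equipment. The sketch below is deliberately simplified and every figure in it is hypothetical, not NetApp or NVIDIA pricing; a real comparison would also account for depreciation, staffing, utilization, and data egress charges.

```python
import math


def breakeven_months(onprem_capex: float, onprem_monthly_opex: float,
                     cloud_monthly_cost: float) -> float:
    """Months until cumulative cloud spend overtakes on-prem capex plus opex.

    Returns math.inf if the cloud is no more expensive per month than running on premises.
    """
    monthly_savings = cloud_monthly_cost - onprem_monthly_opex
    if monthly_savings <= 0:
        return math.inf
    return onprem_capex / monthly_savings


# Hypothetical figures for illustration only.
print(breakeven_months(1_200_000, 20_000, 70_000))   # 24.0
print(breakeven_months(1_200_000, 20_000, 120_000))  # 12.0 as cloud usage grows
```

The second call shows why cost becomes an issue as operations grow: when monthly cloud spend rises, the break-even point arrives sooner, which is part of why on-premises deployment often makes sense in the long run.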

Data Science Strategy

Your data science strategy should be closely tied to your use cases and datasets:

- Machine learning (ML) versus deep learning (DL). ML techniques are commonly applied to structured data, while DL is used for unstructured data (images, video, audio, text).

- Algorithm selection. Data scientists typically use an empirical process to choose the best algorithm for a problem, testing different models and parameters to achieve the best results in the shortest time. Software partners may offer a library of algorithms and semi-autonomous algorithm selection for the use case.
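The empirical selection process described above boils down to scoring each candidate on held-out data and keeping the winner. The minimal sketch below uses a majority-class baseline and k-nearest neighbors with two values of k as stand-in candidates on a toy dataset; these models and the data are illustrative choices, not a recommendation for any particular use case.

```python
import math
from collections import Counter


def majority_baseline(train, query):
    """Ignore the features and predict the most common training label."""
    return Counter(label for _, label in train).most_common(1)[0][0]


def make_knn(k):
    """Build a k-nearest-neighbors predictor; k is the parameter under test."""
    def predict(train, query):
        nearest = sorted(train, key=lambda ex: math.dist(ex[0], query))[:k]
        return Counter(label for _, label in nearest).most_common(1)[0][0]
    return predict


def validation_accuracy(model, train, validation):
    """Fraction of held-out examples the model labels correctly."""
    correct = sum(model(train, x) == y for x, y in validation)
    return correct / len(validation)


def select_model(candidates, train, validation):
    """Score every candidate on held-out data; ties go to the one listed first."""
    scores = {name: validation_accuracy(m, train, validation)
              for name, m in candidates.items()}
    best = max(scores, key=scores.get)
    return best, scores


# Toy data: two well-separated clusters of (features, label) pairs.
train = [((0, 0), "a"), ((0, 1), "a"), ((1, 0), "a"),
         ((5, 5), "b"), ((5, 6), "b"), ((6, 5), "b")]
validation = [((0.5, 0.5), "a"), ((5.5, 5.5), "b")]
candidates = {"majority-baseline": majority_baseline,
              "1-NN": make_knn(1), "3-NN": make_knn(3)}

best, scores = select_model(candidates, train, validation)
print(best, scores)  # 1-NN {'majority-baseline': 0.5, '1-NN': 1.0, '3-NN': 1.0}
```

Including a trivial baseline is a useful habit: if a sophisticated model cannot beat it on validation data, the problem is usually the data or the framing, not the algorithm.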

Operational Strategy

Part of the strategy for your AI Center of Excellence should include an understanding of who the stakeholders are and the model for engaging with them. Create a map of stakeholders and their projected needs in terms of:

- Compute and data resources

- Data engineering and data science resources

Then decide how the CoE team itself will be organized:

- Can you ensure the success of cross-functional teams?

- Can you operate as a hybrid team, with infrastructure controlled by central IT while data engineering and data science reside in the lines of business?

- Should you have a single team with diverse data science, data engineering, and infrastructure expertise?
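A stakeholder map can start as a simple table of projected needs that rolls up into the total capacity the shared CoE platform must provide. The sketch below assumes hypothetical stakeholder groups and figures; the fields mirror the two categories of need listed above.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class StakeholderNeeds:
    """Projected needs for one stakeholder group (all figures hypothetical)."""
    name: str
    gpus: int                # compute
    storage_tb: float        # data
    data_engineers: float    # full-time equivalents
    data_scientists: float   # full-time equivalents


def total_needs(stakeholders: List[StakeholderNeeds]) -> Dict[str, float]:
    """Sum projected needs across stakeholders to size the shared CoE platform."""
    return {
        "gpus": sum(s.gpus for s in stakeholders),
        "storage_tb": sum(s.storage_tb for s in stakeholders),
        "data_engineers": sum(s.data_engineers for s in stakeholders),
        "data_scientists": sum(s.data_scientists for s in stakeholders),
    }


stakeholders = [
    StakeholderNeeds("retail BU", gpus=8, storage_tb=200.0,
                     data_engineers=2.0, data_scientists=3.0),
    StakeholderNeeds("supply chain BU", gpus=4, storage_tb=50.0,
                     data_engineers=1.0, data_scientists=1.5),
]
print(total_needs(stakeholders))
# {'gpus': 12, 'storage_tb': 250.0, 'data_engineers': 3.0, 'data_scientists': 4.5}
```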

Milestones and Metrics

As you move past the planning phases and begin to build out your Center of Excellence, it’s important to establish milestones for all necessary tasks while achieving as much parallelism as possible across different functions. The following table illustrates how this might break down across various functional teams. Note that I did not refer to DevOps earlier, but as AI moves from pilot to production, integration and other DevOps functions are needed to operationalize trained models.

| Functional Team | Tasks |
|---|---|
| Data Engineering | |
| Infrastructure | |
| Data Science | |
| DevOps | |
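Maximizing parallelism across teams is essentially a dependency-scheduling problem: any milestone whose prerequisites are complete can start immediately. The sketch below groups tasks into "waves" that can run concurrently, using Python’s standard `graphlib.TopologicalSorter` (Python 3.9+). The task names and dependencies are hypothetical examples, not a prescribed plan.

```python
from graphlib import TopologicalSorter  # Python 3.9+
from typing import Dict, List, Set


def parallel_waves(deps: Dict[str, Set[str]]) -> List[List[str]]:
    """Group tasks into waves; every task in a wave can run in parallel.

    deps maps each task to the set of tasks that must finish before it starts.
    """
    sorter = TopologicalSorter(deps)
    sorter.prepare()  # raises graphlib.CycleError if the plan has a circular dependency
    waves = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())
        waves.append(ready)
        sorter.done(*ready)
    return waves


# Hypothetical tasks, one or two per functional team.
plan = {
    "infra: provision GPU cluster": set(),
    "data-eng: build ingest pipeline": set(),
    "data-eng: label pilot dataset": {"data-eng: build ingest pipeline"},
    "data-sci: train baseline model": {"infra: provision GPU cluster",
                                       "data-eng: label pilot dataset"},
    "devops: deploy inference service": {"data-sci: train baseline model"},
}
for i, wave in enumerate(parallel_waves(plan), start=1):
    print(i, wave)
```

In this example, infrastructure provisioning and pipeline building proceed side by side in the first wave, which is the kind of cross-team parallelism the milestone plan should aim for.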

Finally, for each stage or milestone you should establish metrics to track your success. Be mindful of how you will evolve from one use case—or a few initial pilots—to multiple use cases across multiple lines of business, eventually expanding to include upstream and downstream vendors and partners, as your AI efforts progress from a crawl, to a walk, to a run.

Finding out More

You can learn more about building an AI Center of Excellence in the following GTC Digital on-demand session:

Session S22548. Unlocking Business Transformation with an AI Center of Excellence.

Speakers: Santosh Rao (NetApp) and Tony Paikeday (NVIDIA)

Uncover the secrets for operationalizing AI across the enterprise by building a mature service that enables data science teams to build AI applications faster.

More Information and Resources

To learn more about the full range of NetApp AI solutions, visit netapp.com/ai. Check out these additional resources:

- NetApp AI Control Plane solution brief

- NetApp EF600 All-Flash Array with NVIDIA DGX SuperPOD solution brief

- NetApp ONTAP and Lenovo for Entry-Level AI/ML solution brief

- Operationalize Data Science at Scale with NetApp and Iguazio solution brief

- New NetApp Solutions Power Enterprise AI Needs

- Integrate Data Management into Your MLOps Processes with NetApp AI Control Plane

¹ Source: AI Global Survey 2019 (IDC, May 2019); Artificial Intelligence Global Adoption Trends and Strategies (IDC #US45120919, June 2019)

Santosh Rao

Santosh Rao is a Senior Technical Director and leads the AI & Data Engineering Full Stack Platform at NetApp. In this role, he is responsible for the technology architecture, execution, and overall NetApp AI business. Santosh previously led the Data ONTAP technology innovation agenda for workloads and solutions ranging from NoSQL, big data, and virtualization to enterprise apps and other 2nd and 3rd platform workloads. He has held a number of roles within NetApp and led the original ground-up development of clustered ONTAP SAN, as well as a number of follow-on ONTAP SAN products for data migration, mobility, protection, virtualization, SLO management, app integration, and all-flash SAN. Prior to joining NetApp, Santosh was a Master Technologist at HP, where he led the development of a number of storage and operating system technologies, including early-generation products.